"The meeting was somewhere in Seattle," said Don Alvarez. "I don't remember which building exactly, but I do remember precisely how I felt walking out afterwards. My head was exploding. I couldn't believe the incredible power Amazon had just put at my fingertips."

Alvarez—an engineering expert with a PhD in Physics, who counted three trips to the South Pole to "study the glow leftover from the Big Bang" as part of his academic experience—had come across his fair share of mind-bending concepts in the past. Even for him, this was different.

The year was 2006, and Alvarez was one of the very first people in the world to see, and test out, Amazon Simple Storage Service (S3), at that time a confidential beta project to enable the storage and retrieval of any amount of data, from anywhere on the web.

It sounds deceptively straightforward. But 15 years ago, it represented a breakthrough in computing that would, when made public, revolutionize the way we live and work.

Back then, leaving the Amazon offices in downtown Seattle, Alvarez did not know that the yet-to-be-released product he'd just been given a glimpse of would become the first building block of AWS.

What he did know was that he had access to something that was "solving the biggest problem pretty much any startup, anywhere in the world faced: what do we do about all this data?"

Alvarez's own startup, FilmmakerLive—a concept for helping moviemakers storyboard their projects online in remote collaboration with others—had been, until that point, more of a vision than a reality. The main concern for him and his co-founders was how to store the content their future customers were going to create, until they were introduced to S3.

"It just seemed like a thing we desperately needed, that lots of people desperately needed," said Nathan McFarland, founder of CastingWords, which along with FilmmakerLive was one of the original three customers named in the press release announcing the launch of AWS on March 14, 2006.

CastingWords advertised itself as a podcast transcription service, but really it was catering to the same customers as the traditional transcription companies with which it was then competing.

"Podcasts did exist, but they were still quite new," said McFarland. "We actually had very few podcasters as customers. Instead, it was lots of radio shows, pastors, preachers, and market researchers. Aligning ourselves with podcasts was our way of signaling that we were modern and doing things differently."

At that time, conventional transcription services quoted jobs on a case-by-case basis and usually required people to send in MP3s or burned CDs by mail. The audio would then be transcribed and the transcription returned by email. It was a lengthy and expensive process, and one that CastingWords was trying to disrupt by moving it entirely online and charging a flat fee.

For McFarland, the invitation to take part in the beta testing phase of Amazon S3 was fortuitous. The main server CastingWords relied on to store its data—a small machine with "about six spinning discs in a room somewhere in San Francisco"—had recently crashed, at near catastrophic cost to the company.

"It interrupted the business for weeks," he said. "We had a lot of angry customers and a lot of audio that went missing, which we weren't sure we could ever recover."

Before CastingWords' server went down, McFarland had already signed the confidentiality agreement to access S3 but hadn't tried to use it yet. With his business facing a "major crisis," he arranged for someone to fly to the Bay Area, pull the hard disks from the broken machine, and bring them back to Portland, where he was living. He took the data and put it straight onto the new service Amazon had asked him to test.

"As soon as we moved to S3, the costs of storage and bandwidth alone, not to mention maintaining servers, just vanished overnight," he said. "We realized pretty quickly there was so much it could take off our hands. It did this one thing that had been such a huge pain point for us: storing many types of files of varying size and allowing us to locate these files and provide them to people at any given time—and it did it perfectly. There was literally nothing else like it."

While McFarland and Alvarez were essentially trying to build systems that could manage potentially untold amounts of information, the third customer mentioned in the AWS press release already understood how much content they were dealing with and the gargantuan task that lay ahead.

The Stardust@home project at the University of California, Berkeley, launched in 2006 with a call for interested people, anywhere in the world, to join a very specific hunt for particles from outside our solar system, the "stardust" from which we are all made.

Andrew Westphal, Associate Director at UC Berkeley's Space Sciences Laboratory, who has led the project since its inception 15 years ago, was part of the scientific team working on the NASA Stardust mission. The space probe had collected interstellar dust in a substance called aerogel, a "bizarre, transparent material, almost like solid smoke," and successfully brought it back to Earth for study for the first time.

It was a major coup for the scientists, who expected to have a few dozen interstellar dust particles somewhere in the samples. The kicker was that in order to analyze the particles, they first had to find them. At roughly the size of a micron—one millionth of a meter—the particles could only be located by looking through the aerogel with a microscope, at extremely high magnification.

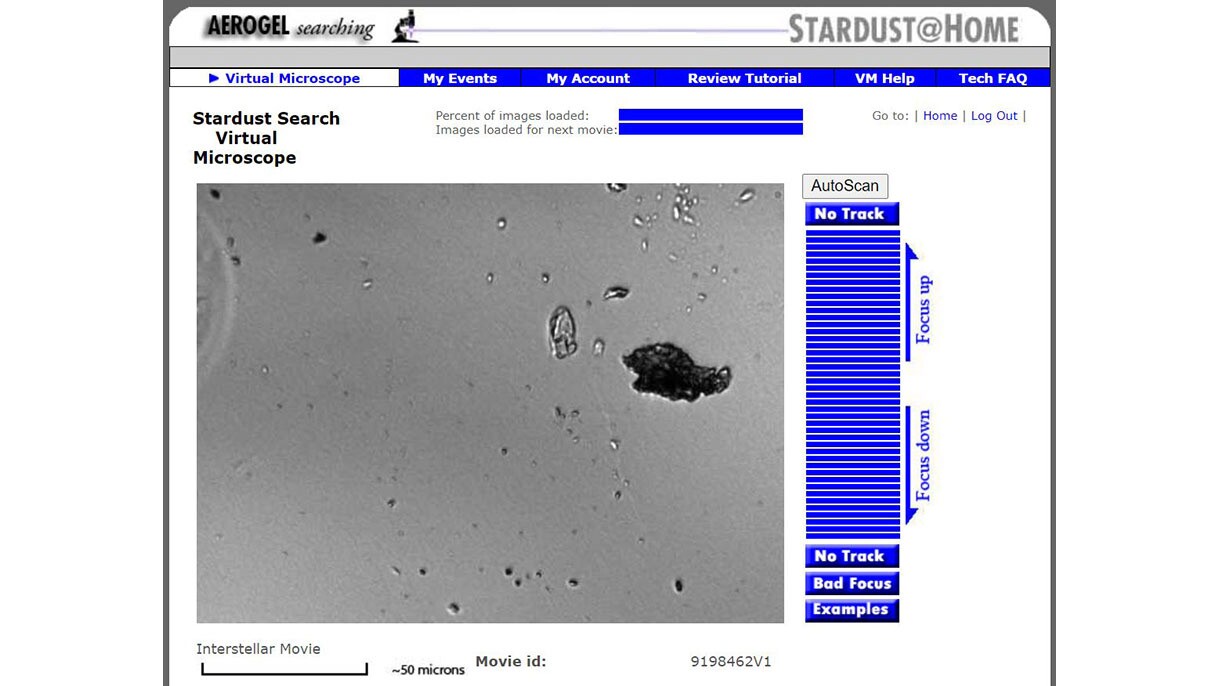

Volunteers can focus the virtual microscope by moving their mouse up and down.

"What this meant in practice was sorting through images. Millions and millions of images," said Westphal. "We did the math and realized how many fields of view we were going to have to search. It was pretty daunting for a small research group like ours."

Inspired by the massively distributed computing projects taking off during that era, Westphal and his team decided to set up "a distributed thinking project." Their plan was to crowdsource thousands of pairs of eyes, belonging to willing members of the public, who (with some basic training) could learn to identify distinctive trails in the aerogel that indicated the presence of a particle.

"It's a three-dimensional problem," Westphal explains. "If you were using a microscope, you would be focusing up and down to look through the aerogel. One of our team developed a virtual microscope that replicates this mechanism, enabling anyone with a computer to search through a stack of images, simply by running their mouse up and down."

In order to make these millions of images available to all the would-be galactic investigators they hoped to enroll in the project, they needed somewhere fast, reliable, and cost-effective to store them. The answer was Amazon S3.

Today, the Stardust@home project is still going strong, with more than 34,000 people having contributed to about 130 million searches in total. And they are still not done.

"So far we have identified four interstellar dust particles," explains Westphal. "We were expecting to find a lot more. Scientifically, as so often happens, we've raised more questions than we have answers. But when I think about what we've accomplished with this 'citizen science' approach to research, it's incredible. And it was enabled by what we now know today as the cloud."

One of the millions of images in the Stardust@home project.

Westphal, as with Alvarez and McFarland, had no idea who else had been using Amazon S3 in the beta testing phase until the press release came out on that day in March.

That three such different projects shared a need for the same simple service, which no one else was then providing, seems in hindsight a clear indication of what was to come. In 2006, the signs were a little harder to read.

"I could see how a lot of companies that needed to keep track of digital assets would benefit from Amazon S3," said McFarland. "What wasn't obvious to me then was just how many different types of organizations, everywhere in the world, would have a use for it."

"It totally changed how people build businesses," he said. "You no longer needed to find tens of thousands of dollars to invest in hardware when you weren't even sure if something was going to work. It just de-risked everything. You could store all the data you needed on S3, and things that you couldn't have run on your personal computer before suddenly became doable. It completely transformed our understanding of what was possible."

This year is the 15th anniversary of AWS.
