Amazon Web Services (AWS) and Mistral AI, a leading artificial intelligence (AI) startup from France, today announced that Mistral Large is publicly available on Amazon Bedrock. Last month, AWS added two high-performing Mistral AI models, Mistral 7B and Mixtral 8x7B, to Amazon Bedrock, and now Mistral Large joins them. As the latest and most advanced large language model (LLM) from Mistral AI, Mistral Large gives customers more choice when building their generative AI applications.

Amazon Bedrock is AWS’s fully managed service that provides secure, easy access to the industry’s widest choice of high-performing, fully managed foundation models (FMs), along with the most compelling set of features (including best-in-class retrieval augmented generation, guardrails, model evaluation, and AI-powered agents) to help customers build highly capable, cost-effective, low-latency generative AI applications. As part of the collaboration, Mistral AI will use AWS’s powerful, purpose-built AI chips, AWS Trainium and AWS Inferentia, to help customers combine a highly accurate FM with trusted enterprise-grade capabilities on Amazon Bedrock.

With today's announcement, Mistral Large joins other industry-leading models on Amazon Bedrock, such as Anthropic's Claude 3 models, making AWS the only cloud provider to offer the most popular and advanced FMs to customers.

Mistral AI is the latest AI company to bring all of its advanced FM technologies to Amazon Bedrock

Mistral Large is a cutting-edge text generation model from Mistral AI with top-tier reasoning capabilities for complex multilingual tasks, including text understanding, transformation, and code generation. Customers can use Mistral Large to hold articulate conversations, generate nuanced content, and tackle complex reasoning tasks. The model’s strengths also extend to coding, with proficiency in code generation, code review, and writing code comments across mainstream programming languages. Mistral Large is fluent in English, French, Spanish, German, and Italian, with a nuanced understanding of grammar and cultural context.

Mistral Large, along with the other Mistral AI models (Mistral 7B and Mixtral 8x7B), is available today on Amazon Bedrock in the US East (N. Virginia), US West (Oregon), and Europe (Paris) Regions. View the full Region list for future updates, and learn more about Mistral AI on Amazon Bedrock.
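For readers who want to try the model, here is a minimal Python sketch of calling Mistral Large through the Amazon Bedrock runtime API with boto3, the AWS SDK for Python. It assumes the model identifier `mistral.mistral-large-2402-v1:0`, the Mistral text-completion request fields (`prompt`, `max_tokens`, `temperature`), and a response shaped as `outputs[0].text`; these are assumptions to verify against the current Bedrock model parameters documentation. Calling the model also requires AWS credentials and model access enabled in the chosen Region. The helper names are hypothetical.

```python
import json

# Assumed Bedrock model ID for Mistral Large; confirm it in the Bedrock console.
MISTRAL_LARGE_MODEL_ID = "mistral.mistral-large-2402-v1:0"


def build_request_body(user_message: str, max_tokens: int = 512, temperature: float = 0.5) -> str:
    """Wrap a user message in Mistral's [INST] tags and serialize the request body.

    The field names follow the assumed Bedrock request schema for Mistral models.
    """
    payload = {
        "prompt": f"<s>[INST] {user_message} [/INST]",
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return json.dumps(payload)


def extract_text(response_body: dict) -> str:
    """Return the generated text from a decoded response (assumed outputs[0].text shape)."""
    return response_body["outputs"][0]["text"]


def invoke_mistral_large(user_message: str, region: str = "us-west-2") -> str:
    """Send one prompt to Mistral Large via Bedrock (needs AWS credentials and model access)."""
    import boto3  # imported here so the pure helpers above work without boto3 installed

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MISTRAL_LARGE_MODEL_ID,
        body=build_request_body(user_message),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream of JSON bytes; decode it before extracting the text.
    return extract_text(json.loads(response["body"].read()))
```

For example, `invoke_mistral_large("Summarize the key points of this contract.", region="eu-west-3")` would send the request to the Paris Region. Keeping body construction and response parsing in separate pure functions makes them easy to test without calling AWS.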

“By bringing Mistral AI models to Amazon Bedrock, customers will have access to the most cutting-edge and advanced generative AI technologies as well as easy access to enterprise-grade tooling and features all in a secure and private environment,” said Vasi Philomin, vice president of generative AI, AWS. “The Mistral AI team's ability to deliver game-changing innovations in such a swift manner is truly commendable. As they deepen their ties with AWS, we are thrilled to collaborate to propel the advancement of their groundbreaking technology even further.”

“We are excited to announce our collaboration with AWS to accelerate the adoption of our frontier AI technology with organizations around the world,” said Arthur Mensch, CEO, Mistral AI. “Our mission is to make frontier AI ubiquitous, and to achieve this mission, we want to collaborate with the world's leading cloud provider to distribute our top-tier models. We have a long and deep relationship with AWS and through strengthening this relationship today, we will be able to provide tailor-made AI to builders around the world."

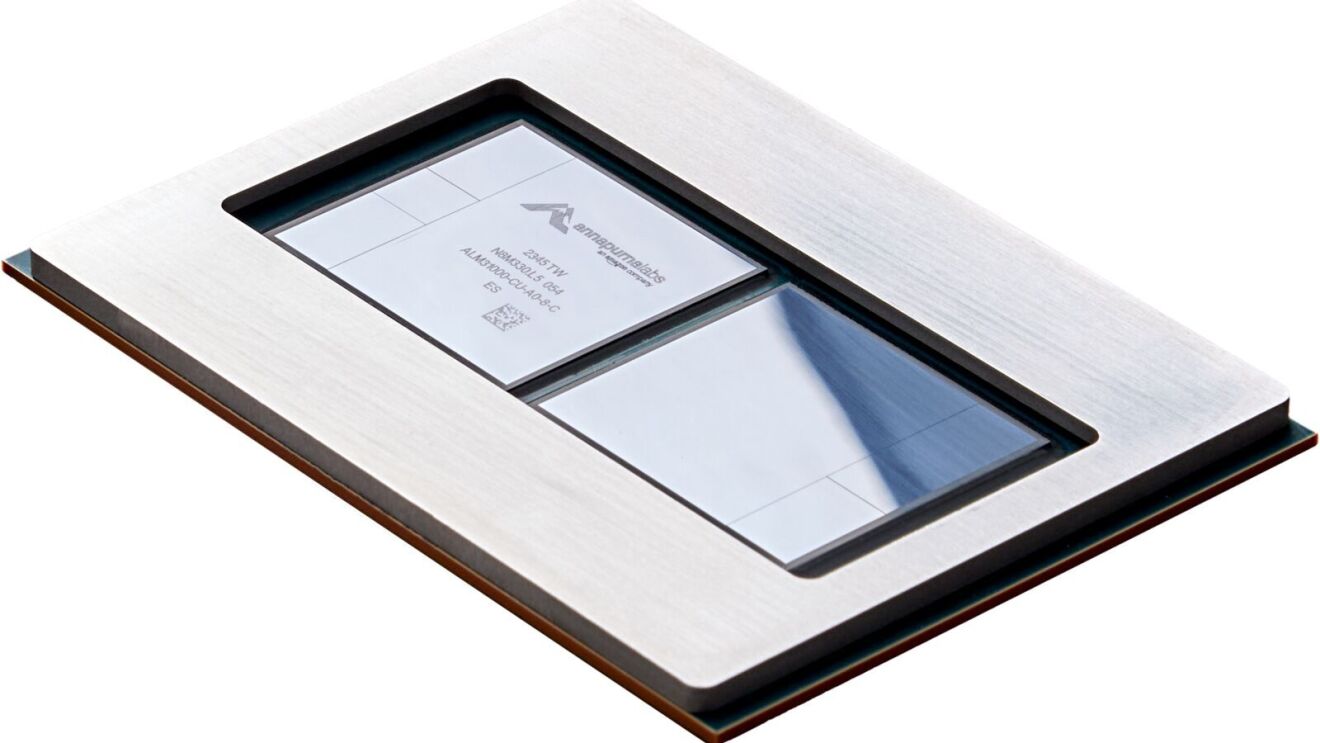

Mistral AI will use AWS’s powerful, purpose-built AI chips to accelerate generative AI for customers

With the increasing adoption of generative AI by organizations, there is a need for powerful and cost-efficient chips capable of running the largest models at scale. Through this collaboration, Mistral AI will use AWS AI-optimized Trainium and Inferentia chips to build and deploy its future foundation models on Amazon Bedrock, benefiting from the price, performance, scale, and security of AWS.

AWS Trainium2

Amazon Bedrock now available using infrastructure based in France

Global organizations of all sizes across virtually every industry are using Amazon Bedrock to build and scale generative AI applications, and starting today, they can do so using AWS infrastructure based in France. With Amazon Bedrock now available in France, customers can access the latest and most advanced LLMs and FMs from the world's leading model providers, such as Anthropic, AI21 Labs, Cohere, Meta, Mistral AI, and Stability AI, as well as Amazon, knowing that their data will remain private, secure, and in France.

Organizations already using Amazon Bedrock to build their generative AI applications include leading names such as adidas, ADP, Alida, Amdocs, Asurion, Automation Anywhere, Blueshift, BMW Group, Booking.com, Bridgewater Associates, Broadridge, CelcomDigi, Clariant, Cloudera, Coinbase, Cox Automotive, Dana-Farber Cancer Institute, Degas Ltd., Delta Air Lines, dentsu, Druva, Enverus, Genesys, Genomics England, Gilead, Glide Publishing Platform, GoDaddy, Happy Fox, Hellmann Worldwide Logistics, INRIX, Intuit, KONE, KT, LexisNexis Legal & Professional, LivTech, Lonely Planet, M1 Finance, Merck, NatWest Group, Netsmart, Nexxiot, OfferUp, Omnicom, Parsyl, Perplexity AI, Persistent, Pfizer, the PGA TOUR, Plaid, Proofpoint, Proto Hologram, Quext, RareJob Technologies, Ricoh USA, Rocket Mortgage, Royal Philips, Salesforce, Siemens, SnapLogic, Takenaka Corporation, Traeger Grills, Ultima, United Airlines, Verint, Verisk, Wix, WPS, and more.

Learn more about Amazon Bedrock, discover how AWS purpose-built chips help accelerate generative AI for Mistral AI and other customers, and find out the Regions where Amazon Bedrock is available.
