Amazon’s expanded partnership with Anthropic is a pivotal development in our commitment to help organizations of all sizes unlock the power of generative artificial intelligence (generative AI) and provide them with options.

“Our customers need us to have great generative AI capabilities,” AWS CEO Adam Selipsky said on Bloomberg Technology. “We have a strategy for providing customers the choices for whatever’s best for the job at hand, so Anthropic is going to provide an amazing set of models to meet many customer use cases.”

So, what comes from this expanded collaboration with Anthropic? Here’s a quick summary, with a focus on our powerful, purpose-built AI chips—AWS Trainium and AWS Inferentia—and how they are at the center of our work with Anthropic.

Anthropic, a leading foundation model (FM) provider and advocate for the responsible deployment of generative AI, has been an AWS customer since 2021. Anthropic’s FM, named “Claude,” excels at thoughtful dialogue, content creation, complex reasoning, creativity, and coding, and it’s available to AWS customers through Amazon Bedrock. Foundation models, like Claude, are large machine learning models pre-trained on vast amounts of data that can be used to power generative AI applications.

What’s different with this expanded collaboration?

Through our expanded partnership, Anthropic has made a long-term commitment to provide AWS customers around the world with access to future generations of its foundation models through Amazon Bedrock. AWS customers will also get early access to unique features for model customization and fine-tuning.

What does this have to do with AWS AI chips?

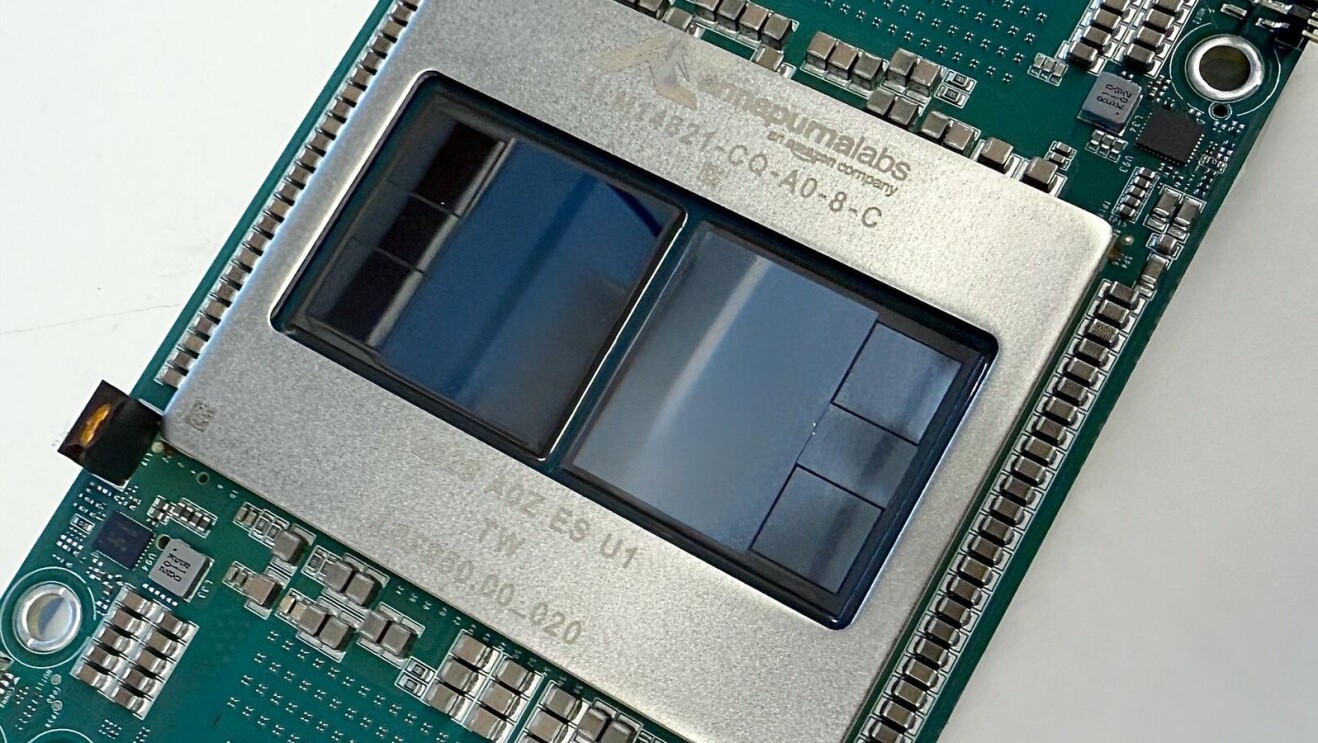

With AWS now Anthropic’s primary cloud provider, another key element of our expanded collaboration, Anthropic will train and deploy its future FMs on the AWS Cloud using our Trainium and Inferentia chips. The AWS chips will be the engines behind the FMs. In addition, Anthropic will collaborate with us on the development of future Trainium and Inferentia technology.

Why did Amazon develop Inferentia and Trainium?

Machine learning requires enormous amounts of computing power. With so many customers adopting machine learning, AI, and now generative AI, Amazon saw the need for chips that were both powerful and cost-efficient while using less energy. Trainium is a purpose-built chip for training deep learning models, offering up to 50% cost-to-train savings over comparable Amazon Elastic Compute Cloud (Amazon EC2) instances. The Inferentia chip enables models to generate inferences more quickly and at lower cost, with up to 40% better price performance.

How do our chips benefit customers and partners, like Anthropic?

Rather than building generative AI from scratch, data scientists start with FMs. These very large models, like Claude, can make the development of new generative AI applications cheaper and more efficient. Trainium, which AWS launched last year, is a high-performance machine learning chip specifically designed to reduce the time and cost of training generative AI models. It can cut training time for some models from months to hours. This also means that building new models requires less money and power, potentially cutting costs by up to 50% and energy consumption by up to 29% versus comparable instances. Our Inferentia2 machine learning inference chip provides up to 50% more performance per watt and can reduce costs by up to 50% compared with comparable instances.

This is good news for our customers and the environment. The faster we can handle training, the less power is consumed. We offer different types of chips, and we share with customers the power profile and performance so they can choose the right chip for the right workload, and optimize for the lowest possible power consumption at the lowest cost.

What tasks are Trainium and Inferentia already helping Amazon and its customers accomplish?

AWS customers are already using Trainium and Inferentia. Here are a few examples. Finch Computing, an AWS customer, develops natural language processing technology that helps its customers uncover insights from huge volumes of text data. Using Inferentia for language translation allowed the company to reduce inference costs by 80%. Dataminr, which detects high-impact events and emerging risks for corporate and government customers, achieved nine times better throughput per dollar for AI models optimized for Inferentia. Closer to home, Inferentia allows Alexa to run much more advanced machine learning algorithms at lower cost and with lower latency than a standard general-purpose chip. And we are using Trainium to improve the customer shopping experience, training large language models that include text, images, multiple languages, and locales, as well as different entities such as products, queries, brands, and reviews.

What’s next for Trainium and Inferentia?

AWS will continue to innovate on behalf of our customers and provide access to the most advanced technologies while improving performance, cost-efficiency, and sustainability. We will collaborate with partners like Anthropic on the development of future generations of Trainium and Inferentia technology.