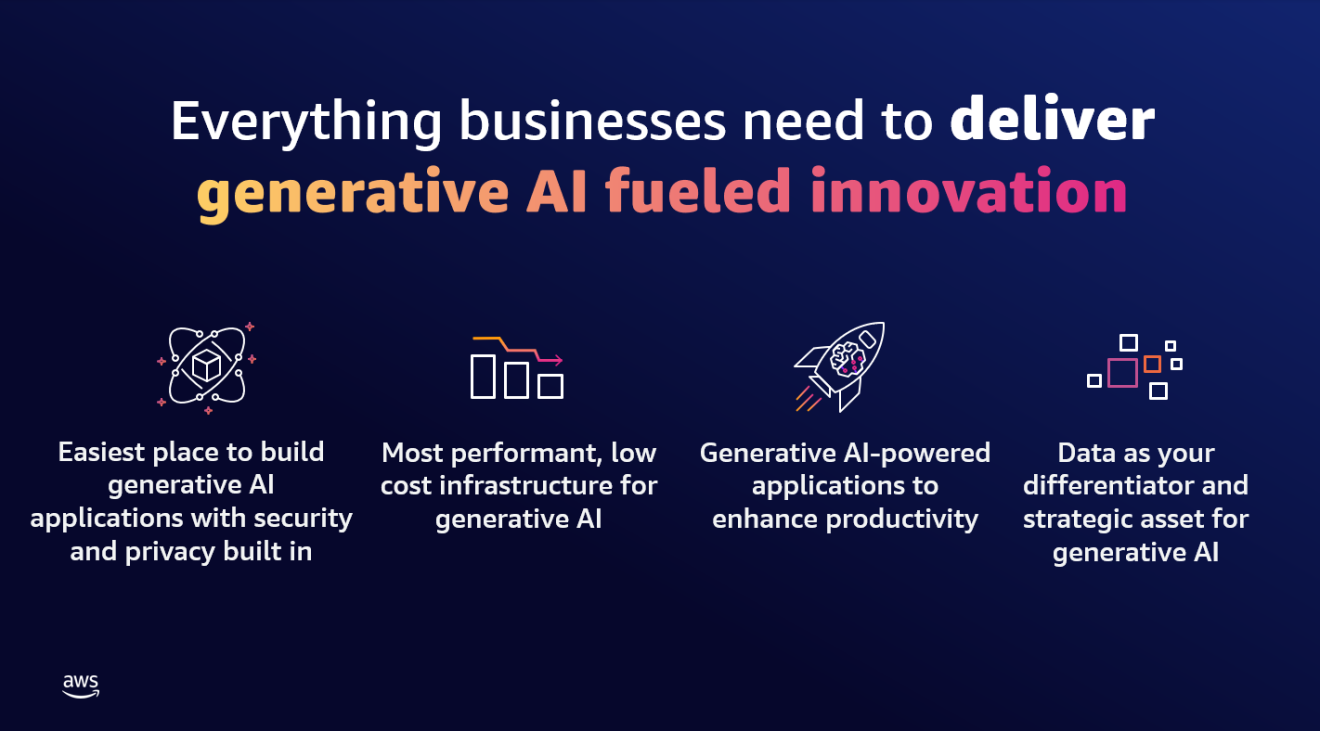

Amazon Web Services (AWS) announced five generative artificial intelligence (generative AI) innovations, so organizations of all sizes can build new generative AI applications, enhance employee productivity, and transform businesses.

“Over the last year, the proliferation of data, access to scalable compute, and advancements in machine learning have led to a surge of interest in generative AI, sparking new ideas that could transform entire industries and reimagine how work gets done,” said Swami Sivasubramanian, vice president of Data and AI at AWS. “Today’s announcement is a major milestone that puts generative AI at the fingertips of every business, from startups to enterprises, and every employee, from developers to data analysts. With powerful, new innovations AWS is bringing greater security, choice, and performance to customers, while also helping them to tightly align their data strategy across their organization, so they can make the most of the transformative potential of generative AI.”

Here is all the news from the AWS announcement. For a deeper technical dive, head to the AWS Machine Learning blog.

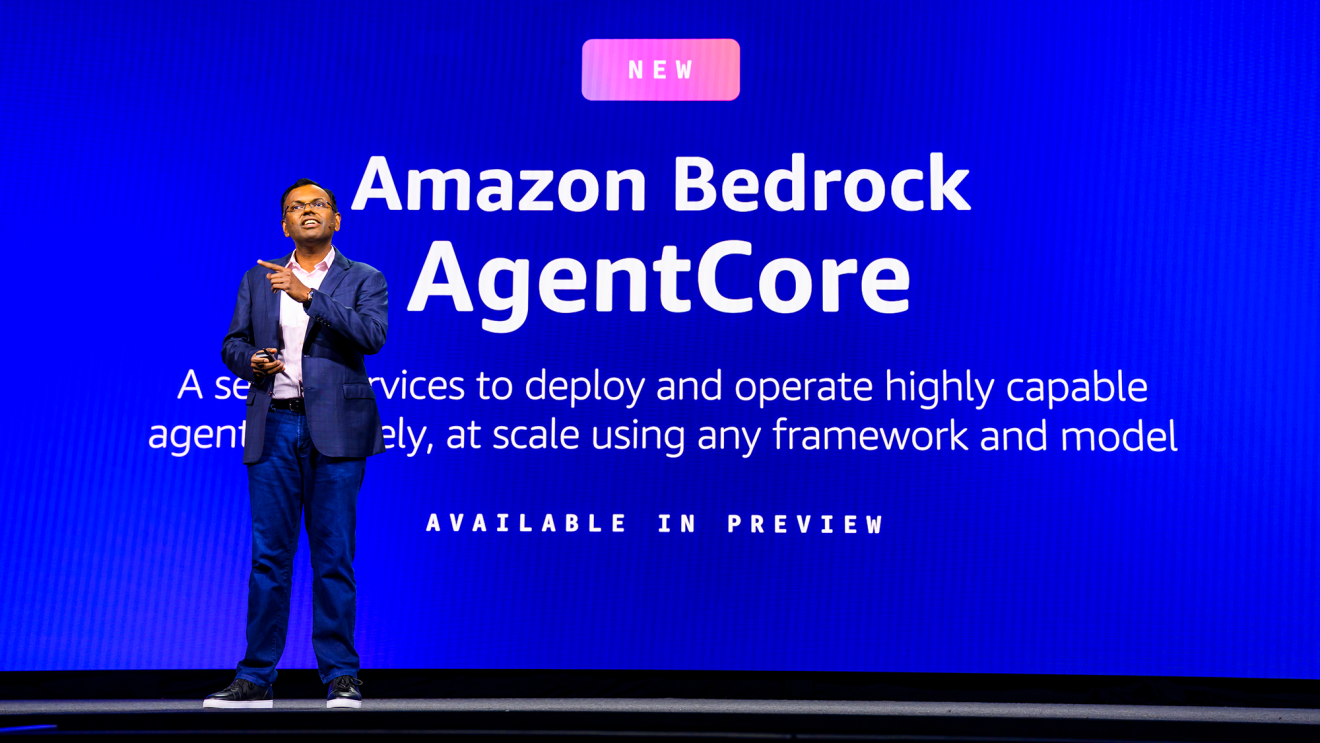

1. Amazon Bedrock is now generally available to help more customers build and scale generative AI applications

Announced in April, Amazon Bedrock is a fully managed service that makes foundation models (FMs) from leading AI companies available through a single application programming interface (API). FMs are very large machine learning (ML) models that are pre-trained on vast amounts of data. The flexibility of FMs makes them applicable to a wide range of use cases, powering everything from search to content creation to drug discovery. However, a few things stand in the way of most businesses looking to adopt generative AI. First, they need a straightforward way to find and access high-performing FMs that give outstanding results and are best suited to their purposes. Second, customers want application integration to be seamless, without managing huge clusters of infrastructure or incurring large costs. Finally, customers want easy ways to use the base FM and build differentiated apps with their data. Since the data customers want for customization is incredibly valuable intellectual property, it must stay completely protected, secure, and private during that process, and customers want control over how the data is shared and used.

With Amazon Bedrock’s comprehensive capabilities, customers can easily experiment with a variety of top FMs and customize them privately with their proprietary data. Additionally, Amazon Bedrock offers differentiated capabilities like creating managed agents that execute complex business tasks—from booking travel and processing insurance claims to creating ad campaigns and managing inventory—without writing any code. Since Amazon Bedrock is serverless, customers do not have to manage any infrastructure, and they can securely integrate and deploy generative AI capabilities into their applications using the AWS services they are already familiar with. Built with security and privacy in mind, Amazon Bedrock makes it easy for customers to protect sensitive data.
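To make the "single API" point concrete, here is a minimal Python sketch using the AWS SDK for Python (boto3). It assumes a `bedrock-runtime` client, AWS credentials with Bedrock model access, and the `us-east-1` Region. The model ID `anthropic.claude-v2` and its `prompt`/`max_tokens_to_sample` request fields are assumptions to check against the current Bedrock documentation, and `invoke_json` is a hypothetical helper name. Switching providers changes only the model ID and the provider-specific JSON body, not the call itself.

```python
import json
from typing import Any, Dict


def invoke_json(client: Any, model_id: str, payload: Dict[str, Any]) -> Dict[str, Any]:
    """Send a JSON body to a Bedrock model and return the decoded JSON response.

    `client` is a boto3 "bedrock-runtime" client, or any object with the same
    invoke_model method (useful for testing without AWS access).
    """
    response = client.invoke_model(
        modelId=model_id,
        body=json.dumps(payload),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream; read it fully and decode the JSON it contains.
    return json.loads(response["body"].read())


if __name__ == "__main__":
    import boto3  # imported here so the helper above can be used without boto3 installed

    runtime = boto3.client("bedrock-runtime", region_name="us-east-1")
    # Assumed model ID and request schema (Anthropic Claude v2 text completions).
    result = invoke_json(
        runtime,
        "anthropic.claude-v2",
        {
            "prompt": "\n\nHuman: Explain what a foundation model is in one sentence.\n\nAssistant:",
            "max_tokens_to_sample": 200,
        },
    )
    print(result["completion"])
```

Keeping the AWS client as a parameter lets the same helper serve every model on Bedrock and makes it easy to swap in a stub during testing.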

2. Amazon Titan Embeddings now generally available

Amazon Titan FMs are a family of models created and pre-trained by AWS on large datasets, making them powerful, general-purpose models that support a variety of use cases. The first of these models to become generally available, Amazon Titan Embeddings, is a large language model (LLM) that converts text into numerical representations called embeddings to power search, personalization, and Retrieval-Augmented Generation (RAG) use cases. The obvious next question is: why would you want to do that?

FMs are well suited to a wide variety of tasks, but they can only respond to questions based on learnings from the training data and contextual information in a prompt, limiting their effectiveness when responses require timely knowledge or proprietary data. To augment FM responses with additional data, many organizations turn to RAG, a technique where the FM connects to a knowledge source that it can reference to augment its responses. But deploying RAG requires vast amounts of data and deep ML expertise, putting RAG out of reach for many organizations. Enter Amazon Titan Embeddings.
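The retrieval half of RAG can be sketched in a few lines of Python. This is an illustrative sketch, not how any AWS service implements RAG: it assumes document passages have already been converted to embedding vectors (the three-dimensional vectors below are made-up stand-ins for real embeddings such as those from Amazon Titan Embeddings), ranks passages by cosine similarity to the question's embedding, and prepends the best matches to the prompt sent to an FM. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query_vec: Sequence[float], corpus: List[Tuple[str, Sequence[float]]], k: int = 2) -> List[str]:
    """Return the k passages whose embeddings are most similar to the query embedding."""
    ranked = sorted(corpus, key=lambda item: cosine_similarity(query_vec, item[1]), reverse=True)
    return [text for text, _ in ranked[:k]]


def build_augmented_prompt(question: str, passages: List[str]) -> str:
    """Prepend retrieved passages to the question so the FM can ground its answer."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}"
    )


if __name__ == "__main__":
    # Toy vectors stand in for real embeddings of each passage.
    corpus = [
        ("Refunds are processed within 5 business days.", [0.9, 0.1, 0.0]),
        ("Our office is closed on public holidays.", [0.0, 0.2, 0.9]),
        ("Refund requests must include an order number.", [0.8, 0.3, 0.1]),
    ]
    query_vec = [1.0, 0.2, 0.0]  # pretend embedding of "How do refunds work?"
    top = retrieve(query_vec, corpus, k=2)
    print(build_augmented_prompt("How do refunds work?", top))
```

In a real deployment the linear scan would typically be replaced by a vector index, but the ranking idea is the same.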

Amazon Titan Embeddings makes it easier for customers to start with RAG and extend the power of any FM using their proprietary data. Amazon Titan Embeddings supports more than 25 languages and a context length of up to 8,000 tokens (the longer the context length, the better a model can understand dialogue or text and generate a correct response), making it well suited to work with single words, phrases, or entire documents, depending on the customer’s use case.
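A sketch of how a longer document might be prepared for Amazon Titan Embeddings: split it into overlapping chunks that stay within the context length, then request one embedding per chunk through Amazon Bedrock. Several details are assumptions: the model ID `amazon.titan-embed-text-v1`, the `{"inputText": ...}` request body and `embedding` response field, and the use of whitespace-separated words as a rough stand-in for tokens (real token counts are usually higher than word counts, so the chunk size leaves generous headroom). `chunk_words`, `embed_text`, and `embed_document` are hypothetical helpers.

```python
import json
from typing import Any, List

TITAN_EMBEDDINGS_MODEL_ID = "amazon.titan-embed-text-v1"  # assumed model ID
MAX_CONTEXT_TOKENS = 8000  # context length cited in the announcement
# Assumption: words approximate tokens; one word can map to several tokens,
# so each chunk is kept well below the context length.
WORDS_PER_CHUNK = MAX_CONTEXT_TOKENS // 4


def chunk_words(text: str, words_per_chunk: int = WORDS_PER_CHUNK, overlap: int = 50) -> List[str]:
    """Split text into overlapping word windows of at most words_per_chunk words."""
    if words_per_chunk <= overlap:
        raise ValueError("words_per_chunk must be larger than overlap")
    words = text.split()
    chunks: List[str] = []
    step = words_per_chunk - overlap
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + words_per_chunk]))
        if start + words_per_chunk >= len(words):
            break  # this window already reaches the end of the text
    return chunks


def embed_text(client: Any, text: str) -> List[float]:
    """Return the embedding vector for one piece of text via Bedrock invoke_model."""
    response = client.invoke_model(
        modelId=TITAN_EMBEDDINGS_MODEL_ID,
        body=json.dumps({"inputText": text}),
        contentType="application/json",
        accept="application/json",
    )
    return json.loads(response["body"].read())["embedding"]


def embed_document(client: Any, text: str) -> List[List[float]]:
    """Embed each chunk of a document separately."""
    return [embed_text(client, chunk) for chunk in chunk_words(text)]


if __name__ == "__main__":
    import boto3  # requires AWS credentials and Bedrock model access

    runtime = boto3.client("bedrock-runtime", region_name="us-east-1")
    vectors = embed_document(runtime, "Amazon Titan Embeddings converts text into vectors.")
    print(len(vectors), len(vectors[0]))
```

The overlap between chunks is a design choice: it keeps sentences that straddle a boundary retrievable from either side.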

3. Meta’s Llama 2 coming in the next few weeks

No single model is optimized for every use case, and to unlock the value of generative AI, customers need access to a variety of models to discover what works best based on their needs. That is why Amazon Bedrock makes it easy for customers to find and test a selection of leading FMs, including models from AI21 Labs, Anthropic, Cohere, Stability AI, Amazon—and in the next few weeks Meta.

Amazon Bedrock is the first fully managed generative AI service to offer Llama 2, Meta’s next-generation LLM, through a managed API. Llama 2 models come with significant improvements over the original Llama models, including being trained on 40% more data and having a longer context length of 4,000 tokens to work with larger documents. Optimized to provide a fast response on AWS infrastructure, the Llama 2 models available via Amazon Bedrock are ideal for dialogue use cases.
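Once Llama 2 is available in Amazon Bedrock, calling it should look like any other Bedrock model, with a Llama-specific request body. The sketch below assumes the model ID `meta.llama2-13b-chat-v1`, the request fields `prompt`, `max_gen_len`, `temperature`, and `top_p`, and a `generation` field in the response; none of these are stated in this announcement, so verify them against the Bedrock documentation. The `[INST]` and `<<SYS>>` wrapper follows the prompt format Meta published for Llama 2 chat models. Helper names are hypothetical.

```python
import json
from typing import Optional

LLAMA2_MODEL_ID = "meta.llama2-13b-chat-v1"  # assumed ID; not given in the announcement


def llama2_chat_prompt(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Wrap a single-turn message in the [INST] chat format used by Llama 2 chat models."""
    if system_prompt:
        return f"[INST] <<SYS>>\n{system_prompt}\n<</SYS>>\n\n{user_message} [/INST]"
    return f"[INST] {user_message} [/INST]"


def llama2_request_body(
    user_message: str,
    system_prompt: Optional[str] = None,
    max_gen_len: int = 512,
    temperature: float = 0.5,
    top_p: float = 0.9,
) -> str:
    """Build the JSON request body (assumed schema) for a Llama 2 chat model on Bedrock."""
    return json.dumps({
        "prompt": llama2_chat_prompt(user_message, system_prompt),
        "max_gen_len": max_gen_len,
        "temperature": temperature,
        "top_p": top_p,
    })


if __name__ == "__main__":
    import boto3  # requires AWS credentials and access to the Llama 2 model

    runtime = boto3.client("bedrock-runtime", region_name="us-east-1")
    response = runtime.invoke_model(
        modelId=LLAMA2_MODEL_ID,
        body=llama2_request_body("Draft a two-line welcome message.", "Be concise."),
        contentType="application/json",
        accept="application/json",
    )
    print(json.loads(response["body"].read())["generation"])
```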

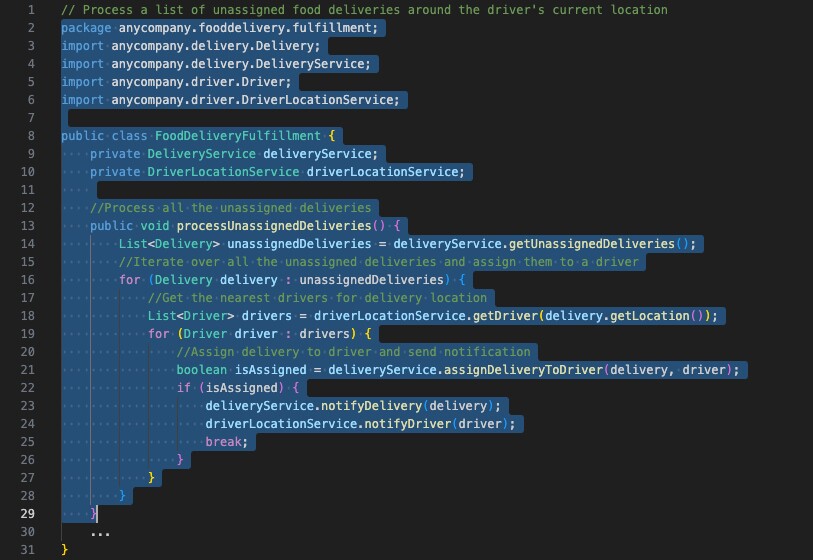

4. New Amazon CodeWhisperer capability—coming soon—will allow customers to securely customize CodeWhisperer suggestions using their private code base to unlock new levels of developer productivity

Trained on billions of lines of Amazon and publicly available code, Amazon CodeWhisperer is an AI-powered coding companion that improves developer productivity. While developers frequently use CodeWhisperer for day-to-day work, they sometimes need to incorporate their organization’s internal, private code base (e.g., internal APIs, libraries, packages, and classes) into an application, none of which are included in CodeWhisperer’s training data. Internal code can also be difficult to work with, because documentation may be limited and there are no public resources or forums where developers can ask for help.

Amazon CodeWhisperer’s new customization capability will unlock the full potential of generative AI-powered coding by securely using a customer’s internal code base and resources to provide recommendations tailored to their unique requirements. Developers save time through more relevant code suggestions across a range of tasks. To start, an administrator connects CodeWhisperer to the organization’s private code repository in a source such as GitLab or Amazon Simple Storage Service (Amazon S3), and schedules a job to create the customization. Built with enterprise-grade security and privacy in mind, the capability keeps customizations completely private, and the underlying FM powering CodeWhisperer does not use the customizations for training, protecting customers’ valuable intellectual property. This customization capability will be available to customers in preview within the next few weeks as part of a new CodeWhisperer Enterprise Tier.
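For the Amazon S3 option, an administrator first needs the private code in a bucket. The sketch below shows only that staging step, using the boto3 S3 client's `upload_file(Filename, Bucket, Key)` method. The bucket name, key prefix, local path, and file-extension filter are hypothetical, and connecting the bucket to CodeWhisperer and scheduling the customization job are not shown.

```python
from pathlib import Path
from typing import Any, Iterable, List

# Hypothetical filter: which file types count as source code worth uploading.
SOURCE_SUFFIXES = {".py", ".java", ".js", ".ts"}


def find_source_files(root: Path, suffixes=SOURCE_SUFFIXES) -> List[Path]:
    """Collect source files under root, skipping anything inside a .git directory."""
    return sorted(
        p for p in root.rglob("*")
        if p.is_file() and p.suffix in suffixes and ".git" not in p.relative_to(root).parts
    )


def s3_key_for(path: Path, root: Path, prefix: str) -> str:
    """Map a local file to an S3 key that preserves its path relative to root."""
    return f"{prefix.rstrip('/')}/{path.relative_to(root).as_posix()}"


def stage_files(s3_client: Any, paths: Iterable[Path], root: Path, bucket: str, prefix: str) -> List[str]:
    """Upload each file to the bucket and return the S3 keys that were used."""
    keys = []
    for path in paths:
        key = s3_key_for(path, root, prefix)
        s3_client.upload_file(str(path), bucket, key)
        keys.append(key)
    return keys


if __name__ == "__main__":
    import boto3  # requires AWS credentials with write access to the bucket

    repo_root = Path("./my-internal-repo")  # hypothetical local checkout
    s3 = boto3.client("s3")
    uploaded = stage_files(
        s3,
        find_source_files(repo_root),
        repo_root,
        bucket="example-codewhisperer-source",  # hypothetical bucket
        prefix="internal-repo",
    )
    print(f"Uploaded {len(uploaded)} files")
```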

5. New generative BI authoring capabilities in Amazon QuickSight help business analysts easily create and customize visuals using natural-language commands

Amazon QuickSight is a unified business intelligence (BI) service built for the cloud that offers interactive dashboards, paginated reports, and embedded analytics, plus natural-language querying capabilities using QuickSight Q, ensuring that every user in the organization can access insights they need in the format they prefer. Business analysts often spend hours with BI tools exploring disparate data sources, adding calculations, and creating and refining visualizations before providing them in dashboards to business stakeholders. To create a single chart, an analyst must first find the correct data source, identify the data fields, set up filters, and make necessary customizations to ensure the visual is compelling. If the visual requires a new calculation (e.g., year-to-date sales), the analyst must identify the necessary reference data, create and verify the calculation, and then add the visual to the report. Organizations would benefit from reducing the time that business analysts spend manually creating and adjusting charts and calculations so that they can devote more time to higher-value tasks.
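To make the year-to-date example concrete, this is the calculation an analyst would otherwise define by hand: sum every sale from January 1 of the reporting year through the as-of date. It is a plain Python illustration with made-up figures and a hypothetical `(date, amount)` row format, not how QuickSight evaluates calculated fields.

```python
from datetime import date
from typing import List, Tuple


def year_to_date_total(sales: List[Tuple[date, float]], as_of: date) -> float:
    """Sum sales from January 1 of as_of's year through as_of, inclusive."""
    start = date(as_of.year, 1, 1)
    return sum(amount for day, amount in sales if start <= day <= as_of)


if __name__ == "__main__":
    # Made-up sales rows: (sale date, amount).
    sales = [
        (date(2022, 12, 31), 500.0),  # prior year, excluded
        (date(2023, 1, 15), 100.0),
        (date(2023, 3, 2), 250.0),
        (date(2023, 6, 30), 75.0),    # after the as-of date, excluded
    ]
    print(year_to_date_total(sales, as_of=date(2023, 3, 31)))
```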

The new generative BI authoring capabilities extend the natural-language querying of QuickSight Q beyond answering well-structured questions (e.g., “what are the top 10 products sold in California?”) to help analysts quickly create customizable visuals from question fragments (e.g., “top 10 products”), clarify the intent of a query by asking follow-up questions, refine visualizations, and complete complex calculations. Business analysts simply describe the desired outcome, and QuickSight generates compelling visuals that can be easily added to a dashboard or report with a single click.
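Behind a request such as "top 10 products sold in California" is an ordinary filter, group, and rank. The sketch below shows that aggregation in plain Python over a hypothetical `(product, state, sales)` row format; it illustrates what the generated visual summarizes, not how QuickSight Q parses the question or builds the chart.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Optional, Tuple

Row = Tuple[str, str, float]  # hypothetical schema: (product, state, sales amount)


def top_products(rows: Iterable[Row], n: int = 10, state: Optional[str] = None) -> List[Tuple[str, float]]:
    """Total sales per product (optionally for one state) and return the n largest."""
    totals: Dict[str, float] = defaultdict(float)
    for product, row_state, amount in rows:
        if state is None or row_state == state:
            totals[product] += amount
    # Sort by descending total; ties are broken alphabetically for stable output.
    return sorted(totals.items(), key=lambda kv: (-kv[1], kv[0]))[:n]


if __name__ == "__main__":
    rows = [
        ("Widget", "CA", 10.0),
        ("Gadget", "CA", 30.0),
        ("Widget", "CA", 25.0),
        ("Gizmo", "NY", 100.0),
    ]
    print(top_products(rows, n=10, state="CA"))  # the question "top 10 products sold in California"
    print(top_products(rows, n=10))              # the fragment "top 10 products"
```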

6. New free generative AI training for Amazon Bedrock

We continue adding to our collection of digital, on-demand training courses that empower learners of all backgrounds and knowledge levels to begin using generative AI. Today, we’ve launched Amazon Bedrock—Getting Started, a free self-paced digital course introducing learners to the service. This one-hour course will introduce developers and technical audiences to Amazon Bedrock's benefits, features, use cases, and technical concepts.