Key takeaways

- Trainium3 UltraServers deliver high performance for AI workloads with up to 4.4x more compute performance, 4x greater energy efficiency, and almost 4x more memory bandwidth than Trainium2 UltraServers—enabling faster AI development with lower operational costs.

- Trn3 UltraServers scale up to 144 Trainium3 chips, delivering up to 362 FP8 PFLOPs with 4x lower latency to train larger models faster and serve inference at scale.

- Customers including Anthropic, Karakuri, Metagenomi, NetoAI, Ricoh, and Splash Music are reducing training and inference costs by up to 50% with Trainium, while Decart is achieving 4x faster inference for real-time generative video at half the cost of GPUs, and Amazon Bedrock is already serving production workloads on Trainium3.

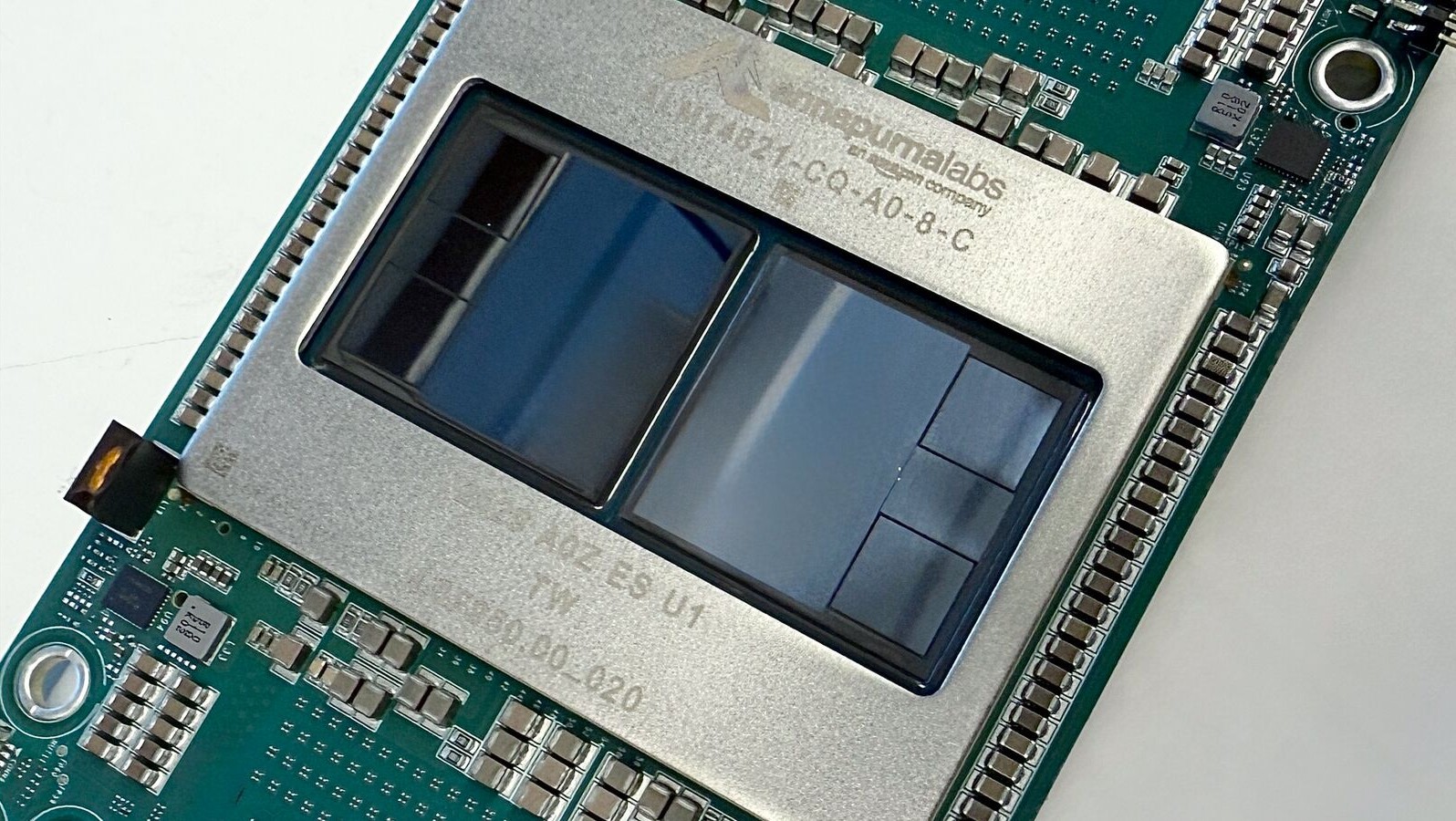

As AI models grow in size and complexity, they are pushing the limits of compute and networking infrastructure, and customers are seeking to reduce both training times and inference latency (the time between when an AI system receives an input and generates the corresponding output). Training cutting-edge models now requires infrastructure investments that only a handful of organizations can afford, while serving AI applications at scale demands compute resources whose costs can quickly spiral out of control. Even with the fastest accelerated instances available today, simply increasing cluster size fails to yield faster training times because of parallelization constraints, and real-time inference demands push single-instance architectures beyond their capabilities.

To help customers overcome these constraints, today we announced the general availability of Amazon EC2 Trn3 UltraServers. Powered by the new Trainium3 chip built on 3nm technology, Trn3 UltraServers enable organizations of all sizes to train larger AI models faster and serve more users at lower cost, democratizing access to the compute power needed for tomorrow's most ambitious AI projects.

Trainium3 UltraServers: Purpose-built for next-generation AI workloads

Trn3 UltraServers pack up to 144 Trainium3 chips into a single integrated system, delivering up to 4.4x more compute performance than Trainium2 UltraServers. This allows you to tackle AI projects that were previously impractical or too expensive by training models faster, cutting time from months to weeks, serving more inference requests from users simultaneously, and reducing both time-to-market and operational costs.
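For a rough sense of scale, the two headline figures quoted in this article (up to 144 chips and up to 362 FP8 PFLOPs per UltraServer) can be combined into an implied per-chip number. The sketch below assumes peak compute is spread evenly across chips, which is a simplification; the function name is illustrative and not part of any AWS API.

```python
# Figures quoted in this article (both are "up to" peak values).
CHIPS_PER_ULTRASERVER = 144
ULTRASERVER_FP8_PFLOPS = 362.0


def per_chip_fp8_pflops(total_pflops: float, chips: int) -> float:
    """Evenly divide an UltraServer's peak FP8 compute across its chips."""
    if chips <= 0:
        raise ValueError("chips must be positive")
    return total_pflops / chips


per_chip = per_chip_fp8_pflops(ULTRASERVER_FP8_PFLOPS, CHIPS_PER_ULTRASERVER)
print(f"Implied peak per chip: {per_chip:.2f} FP8 PFLOPs")
# prints "Implied peak per chip: 2.51 FP8 PFLOPs"
```

This is a theoretical peak derived from published totals, not a measured per-chip benchmark.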

In our testing with OpenAI's open-weight model GPT-OSS, Trn3 UltraServers achieve 3x higher throughput per chip and 4x faster response times than Trn2 UltraServers. This means businesses can scale their AI applications to handle peak demand with a smaller infrastructure footprint, directly improving user experience while reducing the cost per inference request.
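To see how a per-chip throughput gain translates into a smaller footprint, here is a minimal capacity-planning sketch. The 3x ratio comes from the GPT-OSS result above; the peak request rate and the Trn2 per-chip baseline are hypothetical values chosen only for illustration, and real sizing would also depend on model, batch size, and latency targets.

```python
import math

# Hypothetical workload numbers, chosen only for illustration.
PEAK_REQUESTS_PER_SEC = 12_000
TRN2_REQUESTS_PER_SEC_PER_CHIP = 25.0  # assumed baseline, not a measured value

# Per-chip throughput ratio quoted in this article for GPT-OSS testing.
TRN3_THROUGHPUT_GAIN = 3.0


def chips_needed(peak_rps: float, rps_per_chip: float) -> int:
    """Smallest whole number of chips that covers the peak request rate."""
    if rps_per_chip <= 0:
        raise ValueError("rps_per_chip must be positive")
    return math.ceil(peak_rps / rps_per_chip)


trn2_chips = chips_needed(PEAK_REQUESTS_PER_SEC, TRN2_REQUESTS_PER_SEC_PER_CHIP)
trn3_chips = chips_needed(
    PEAK_REQUESTS_PER_SEC, TRN2_REQUESTS_PER_SEC_PER_CHIP * TRN3_THROUGHPUT_GAIN
)
print(f"Trn2 chips: {trn2_chips}, Trn3 chips: {trn3_chips}")
# prints "Trn2 chips: 480, Trn3 chips: 160"
```

Under these assumed inputs, the same peak load is served with one third of the chips, which is where the lower cost per inference request comes from.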

These improvements stem from the purpose-built Trainium3 chip. The chip achieves breakthrough performance through advanced design innovations, optimized interconnects that accelerate data movement between chips, and enhanced memory systems that eliminate bottlenecks when processing large AI models. Beyond raw performance, Trainium3 delivers substantial energy savings, with 40% better energy efficiency compared to previous generations. This efficiency matters at scale, enabling us to offer more cost-effective AI infrastructure while reducing environmental impact across our data centers.

Advanced networking infrastructure engineered for scale

AWS engineered the Trn3 UltraServer as a vertically integrated system—from the chip architecture to the software stack. At the heart of this integration is networking infrastructure designed to eliminate the communication bottlenecks that typically limit distributed AI computing. The new NeuronSwitch-v1 delivers 2x more bandwidth within each UltraServer, while enhanced Neuron Fabric networking reduces communication delays between chips to just under 10 microseconds.

Tomorrow's AI workloads, including agentic systems, mixture-of-experts (MoE) models, and reinforcement learning applications, require massive amounts of data to flow seamlessly between processors. This AWS-engineered network enables you to build AI applications with near-instantaneous responses that were previously impossible, unlocking new use cases like real-time decision systems that process and act on data instantly, and fluid conversational AI that responds naturally without lag.

For customers who need to scale, EC2 UltraClusters 3.0 can connect thousands of UltraServers containing up to 1 million Trainium chips—10x the previous generation—giving you the infrastructure to train the next generation of foundation models. This scale enables projects that simply weren't possible before, from training multimodal models on trillion-token datasets to running real-time inference for millions of concurrent users.
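The "thousands of UltraServers" figure can be checked with simple arithmetic. This sketch assumes every chip sits in a fully populated 144-chip Trn3 UltraServer, which the article does not state; actual cluster compositions may differ, and the function name is illustrative.

```python
import math

# Figures quoted in this article.
MAX_CHIPS_PER_ULTRACLUSTER = 1_000_000
CHIPS_PER_ULTRASERVER = 144  # maximum Trn3 UltraServer configuration


def ultraservers_for(chips: int, chips_per_server: int) -> int:
    """Number of UltraServers needed to host the given chip count."""
    if chips_per_server <= 0:
        raise ValueError("chips_per_server must be positive")
    return math.ceil(chips / chips_per_server)


servers = ultraservers_for(MAX_CHIPS_PER_ULTRACLUSTER, CHIPS_PER_ULTRASERVER)
print(f"UltraServers needed for 1 million chips: {servers}")
# prints "UltraServers needed for 1 million chips: 6945"
```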

Customers already seeing results at frontier scale

Customers are already seeing significant value from Trainium, with companies like Anthropic, Karakuri, Metagenomi, NetoAI, Ricoh, and Splash Music reducing their training costs by up to 50% compared to alternatives. Amazon Bedrock, AWS's managed service for foundation models, is already serving production workloads on Trainium3, demonstrating the chip's readiness for enterprise-scale deployment.

Pioneering AI companies are also using Trainium3 for demanding workloads. Decart, an AI lab specializing in efficient, optimized generative AI video and image models that power real-time interactive experiences, is achieving 4x faster frame generation at half the cost of GPUs for real-time generative video. This makes compute-intensive applications practical at scale, enabling entirely new categories of interactive content, from personalized live experiences to large-scale simulations.

With Project Rainier, AWS collaborated with Anthropic to connect more than 500,000 Trainium2 chips into the world's largest AI compute cluster, five times larger than the infrastructure used to train Anthropic's previous generation of models. Trainium3 builds on this proven foundation, extending the UltraCluster architecture to deliver even greater performance for the next generation of large-scale AI compute clusters and frontier models.

Looking ahead to the next generation of Trainium

We are already working on Trainium4, which is being designed to bring significant performance improvements across all dimensions, including at least 6x the processing performance (FP4), 3x the FP8 performance, and 4x more memory bandwidth to support the next generation of frontier training and inference. With continued hardware and software optimizations, you can expect performance gains that scale well beyond these baseline improvements. FP8 is the industry-standard precision format that balances model accuracy with computational efficiency for modern AI workloads, so the 3x FP8 improvement in Trainium4 represents a foundational leap: you can train AI models at least three times faster or run at least three times more inference requests, with additional gains realized through ongoing software enhancements and workload-specific optimizations.

To deliver even greater scale-up performance, Trainium4 is being designed to support NVIDIA NVLink Fusion high-speed chip interconnect technology. This integration will enable Trainium4, Graviton, and Elastic Fabric Adapter (EFA) to work together seamlessly within common MGX racks, providing you with a cost-effective, rack-scale AI infrastructure that supports both GPU and Trainium servers. The result is a flexible, high-performance platform optimized for demanding AI model training and inference workloads.

Additional resources:

- AWS AI Blog

- Documentation

- See how customers are using Trainium

- Get started with Trainium
