Key takeaways

- AWS expects compelling AI agent use cases to emerge in 2026, even as specific applications remain difficult to predict.

- AI inference represents a building block of computing that enables entirely new applications.

- The biggest enterprise value comes from agents that accomplish tasks, not just summarize content.

AI has already transformed how we create and summarize content, from work emails to movie scripts. Now, AWS CEO Matt Garman sees AI entering a new phase. Speaking at AWS re:Invent 2025, he told more than 60,000 attendees that the technology is shifting from answering questions to accomplishing tasks—and that shift represents a far bigger unlock for enterprise value.

The distinction comes down to what AI actually does. Content summarization and creation deliver value, but task accomplishment delivers far more. "It's not just ‘summarize what happened,’ but ‘go process my insurance claims,’" Garman said in an interview on the Acquired podcast. That shift is reshaping how enterprises think about where to invest in artificial intelligence.

What makes this transformation possible is AI inference, a computing capability in which a model is run against real-world data so that it can generate content, make predictions, take actions, and more. Inference is the engine that powers AI agents, and Garman believes it represents a fundamental breakthrough in what developers can build.

Developers can use AI agents to build in new ways

Garman describes AI inference as a fundamental new building block in computing. "The world invented a new Lego," he said.

Before inference, developers had compute, storage, and databases as their core building blocks—but none of these could independently make decisions or take actions on behalf of users.

"Inference is one of those new building blocks," Garman explained. In other words, developers can now build applications that don't just retrieve information but actually complete work.

To make this vision practical, AWS used re:Invent to announce new innovations in Amazon Bedrock AgentCore, a complete set of services that enables organizations to deploy and operate AI agents. AWS also introduced three frontier agents (Kiro autonomous agent, AWS Security Agent, and AWS DevOps Agent) that exemplify this shift from assistance to autonomous task completion.

Where AI agents fit in the stack

To understand where agents create value, Garman describes a three-layer model.

At the bottom sits cloud infrastructure—the foundational compute, storage, and networking. The middle layer contains services like databases and analytics engines. The top layer is where millions of applications live, from enterprise software to consumer apps.

AI agents, such as Amazon Quick, belong in that top layer. They are built on foundational platforms like Amazon Bedrock AgentCore, which provide secure compute environments where agents can run, gateways that control permissions between agents, and tools that let agents interact with other systems.
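To make the idea of a permission-controlling gateway concrete, here is a minimal sketch in Python. It is not the Amazon Bedrock AgentCore API. The `ToolGateway` class, its methods, and the agent and tool names are all hypothetical, and the sketch covers only one narrow case: an allow-list that decides which agent may call which tool.

```python
from typing import Callable, Dict, Set


class ToolGateway:
    """Hypothetical gateway: agents may only call tools they were granted."""

    def __init__(self) -> None:
        self._tools: Dict[str, Callable[..., object]] = {}
        self._grants: Dict[str, Set[str]] = {}

    def register_tool(self, name: str, func: Callable[..., object]) -> None:
        # Make a tool available behind the gateway.
        self._tools[name] = func

    def grant(self, agent_id: str, tool_name: str) -> None:
        # Record that this agent is allowed to use this tool.
        self._grants.setdefault(agent_id, set()).add(tool_name)

    def call(self, agent_id: str, tool_name: str, **kwargs: object) -> object:
        # Reject unknown tools first, then check the agent's grants.
        if tool_name not in self._tools:
            raise KeyError(f"unknown tool: {tool_name}")
        if tool_name not in self._grants.get(agent_id, set()):
            raise PermissionError(f"{agent_id} may not call {tool_name}")
        return self._tools[tool_name](**kwargs)


# Illustrative usage with made-up agent and tool names.
gateway = ToolGateway()
gateway.register_tool(
    "lookup_policy",
    lambda policy_id: {"policy_id": policy_id, "active": True},
)
gateway.grant("claims-agent", "lookup_policy")
result = gateway.call("claims-agent", "lookup_policy", policy_id="P1")
```

A call from any agent without a grant raises `PermissionError`, which is the behavior a gateway of this kind would be expected to enforce before an agent reaches another system.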

"All these building blocks to build the agents on—we think that's somewhere in that middle layer," Garman said. But the applications leveraging inference will number in the millions, created by developers who see opportunities to automate tasks that previously required human intervention.

Why task accomplishment matters more than content generation

The first wave of generative AI focused on content creation and summarization—writing emails, summarizing documents, generating reports. These capabilities are valuable, but they're limited in how much operational value they deliver.

Task-accomplishing agents represent a different category of value creation.

Take an insurance claims processing agent built by a life insurance company. A content-generation AI might summarize a claim or draft a response letter. A task-accomplishing agent could review the claim against policy terms, cross-reference medical records, calculate payment amounts, flag exceptions for human review, and process approved claims end-to-end.
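The steps in that example can be sketched as a small Python workflow. This is an illustration under stated assumptions, not how any insurer or AWS service implements claims processing. Every name here (`Claim`, `Policy`, `process_claim`, the `review_threshold` value) is hypothetical, and fixed rules stand in for the judgment a model would supply through inference.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict, Optional


class Decision(Enum):
    APPROVED = "approved"
    NEEDS_HUMAN_REVIEW = "needs_human_review"
    DENIED = "denied"


@dataclass
class Policy:
    policy_id: str
    active: bool
    coverage_limit: float  # hypothetical maximum payout


@dataclass
class Claim:
    claim_id: str
    policy_id: str
    amount: float
    records_verified: bool  # stand-in for cross-referencing medical records


@dataclass
class Outcome:
    claim_id: str
    decision: Decision
    payment: float
    reason: str


def process_claim(
    claim: Claim,
    policies: Dict[str, Policy],
    review_threshold: float = 50_000.0,  # assumed cutoff for human review
) -> Outcome:
    # Step 1: review the claim against policy terms.
    policy: Optional[Policy] = policies.get(claim.policy_id)
    if policy is None or not policy.active:
        return Outcome(claim.claim_id, Decision.DENIED, 0.0, "no active policy")

    # Step 2: cross-reference supporting records.
    if not claim.records_verified:
        return Outcome(claim.claim_id, Decision.NEEDS_HUMAN_REVIEW, 0.0,
                       "records could not be verified")

    # Step 3: calculate the payment, capped at the coverage limit.
    payment = min(claim.amount, policy.coverage_limit)

    # Step 4: flag large payments as exceptions for human review.
    if payment >= review_threshold:
        return Outcome(claim.claim_id, Decision.NEEDS_HUMAN_REVIEW, payment,
                       "payment above review threshold")

    # Step 5: approve claims that passed every check.
    return Outcome(claim.claim_id, Decision.APPROVED, payment, "within policy terms")


# Illustrative usage with made-up data.
policies = {"P1": Policy("P1", active=True, coverage_limit=100_000.0)}
small = process_claim(Claim("C1", "P1", 1_200.0, True), policies)
large = process_claim(Claim("C2", "P1", 75_000.0, True), policies)
```

In this sketch the small claim is approved and the large one is routed to a person, which mirrors the division of labor the example describes: the agent completes routine work and escalates exceptions.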

This applies across industries. In software development, agentic AI can understand requirements, write code across multiple repositories, run tests, and submit pull requests. In operations, an agent can detect anomalies, diagnose root causes, and implement fixes.

The operational leverage from agents that complete work—rather than just assist with it—is what Garman believes will drive massive enterprise adoption.

For business leaders charting their AI strategies, this distinction matters: Investments in agents that automate end-to-end workflows will deliver far more ROI than tools that simply help employees work faster. The question isn't whether to adopt AI agents, but where to deploy them for maximum operational impact.

What's ahead for agentic AI

Looking to 2026, Garman predicts widespread enterprise value creation from agents, though he acknowledges the specific use cases will likely surprise us. That uncertainty is part of what he finds exciting about providing infrastructure rather than trying to predict every application.

"That's part of what I love about AWS," Garman said. "We build these building blocks, we give this technology, and then we let the world have the creativity to go find some really cool things."

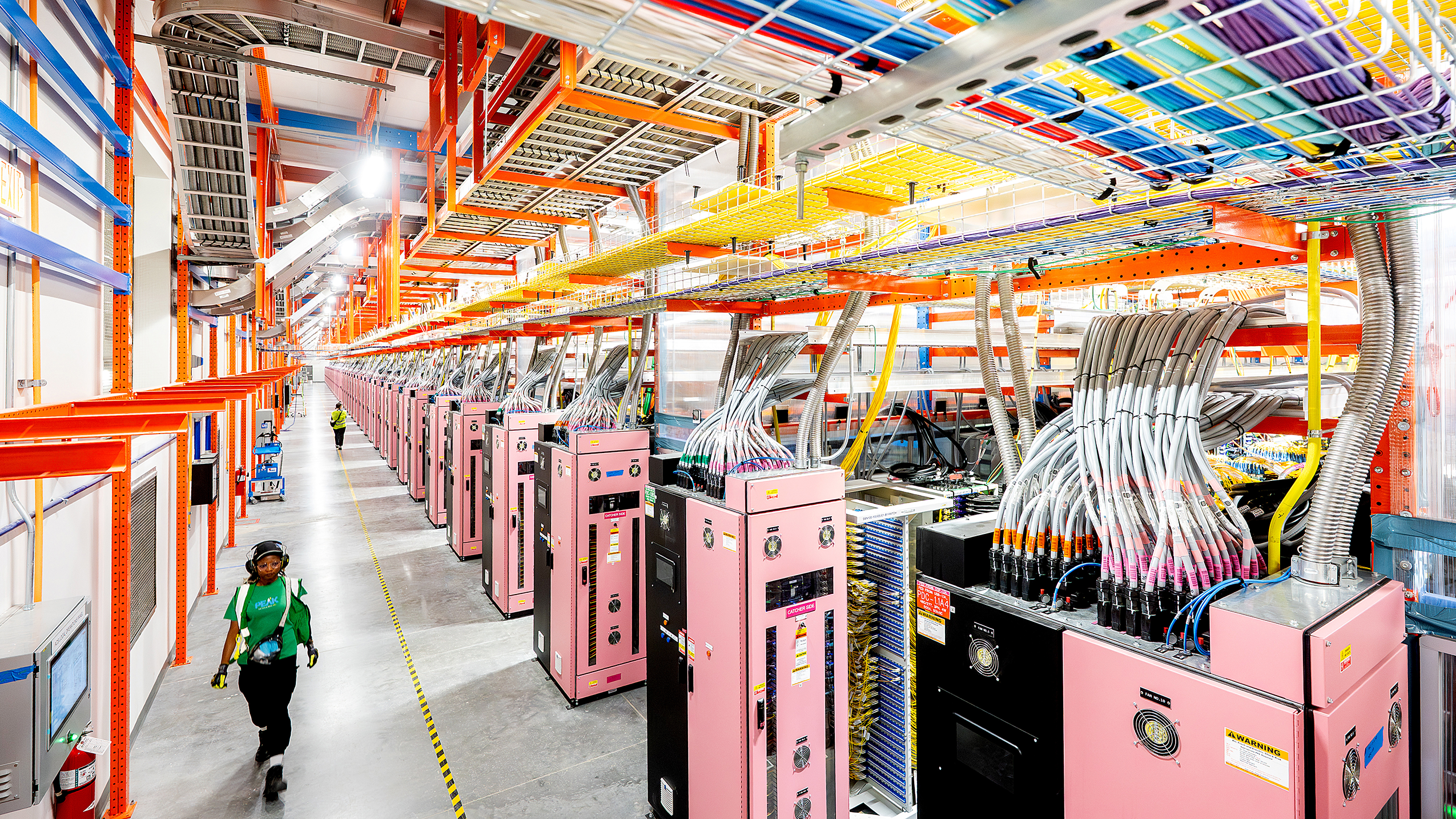

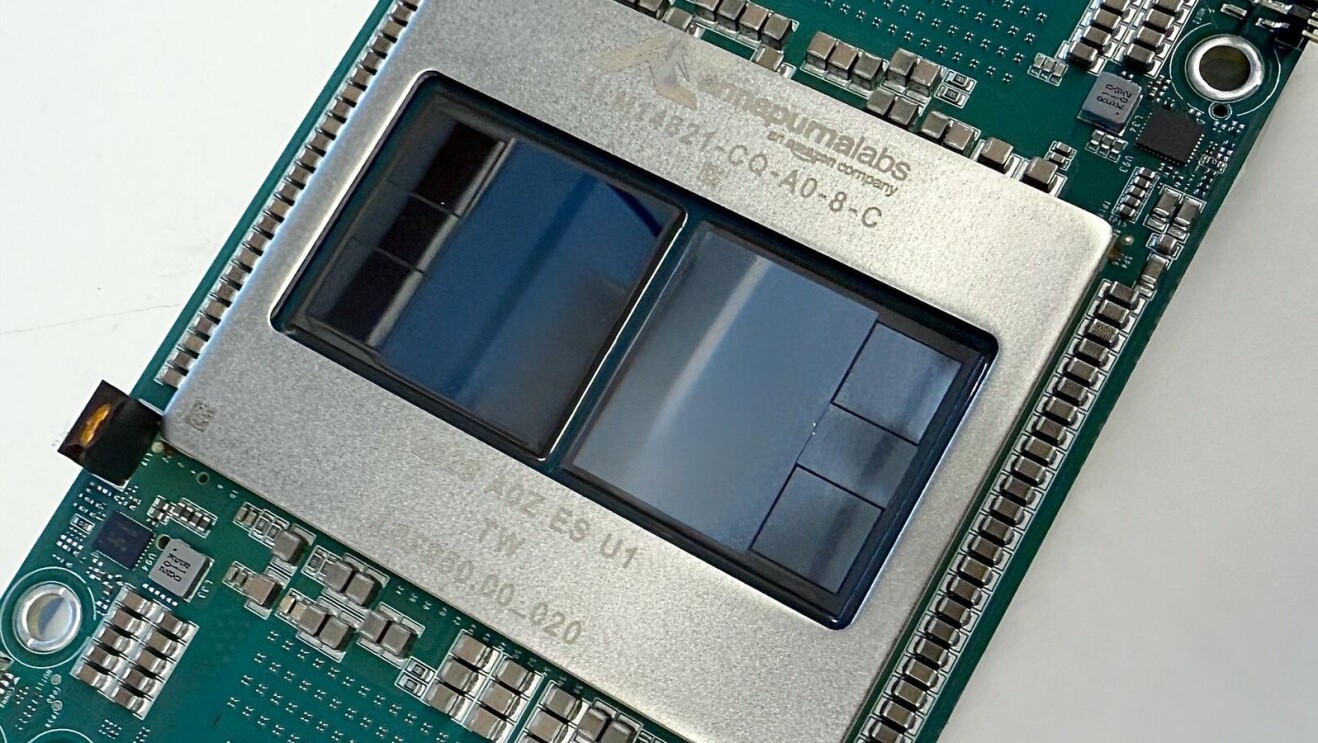

As companies move from AI experimentation to production deployment, AWS is playing across the entire stack—from foundational infrastructure like Graviton5 processors (delivering 25% higher performance) and Trainium3 UltraServers (enabling faster AI model training) to the building blocks that enable developers to create agent-powered applications.

For enterprises evaluating where to invest in AI, the framework is clear: Look for opportunities where agents can accomplish tasks autonomously, not just assist with content creation. Companies ready to explore AI agents can start with Amazon Bedrock AgentCore, which provides pre-built components for building, deploying, and managing agents at scale.

Next, read more about all the key announcements that came from AWS re:Invent 2025.