Key takeaways

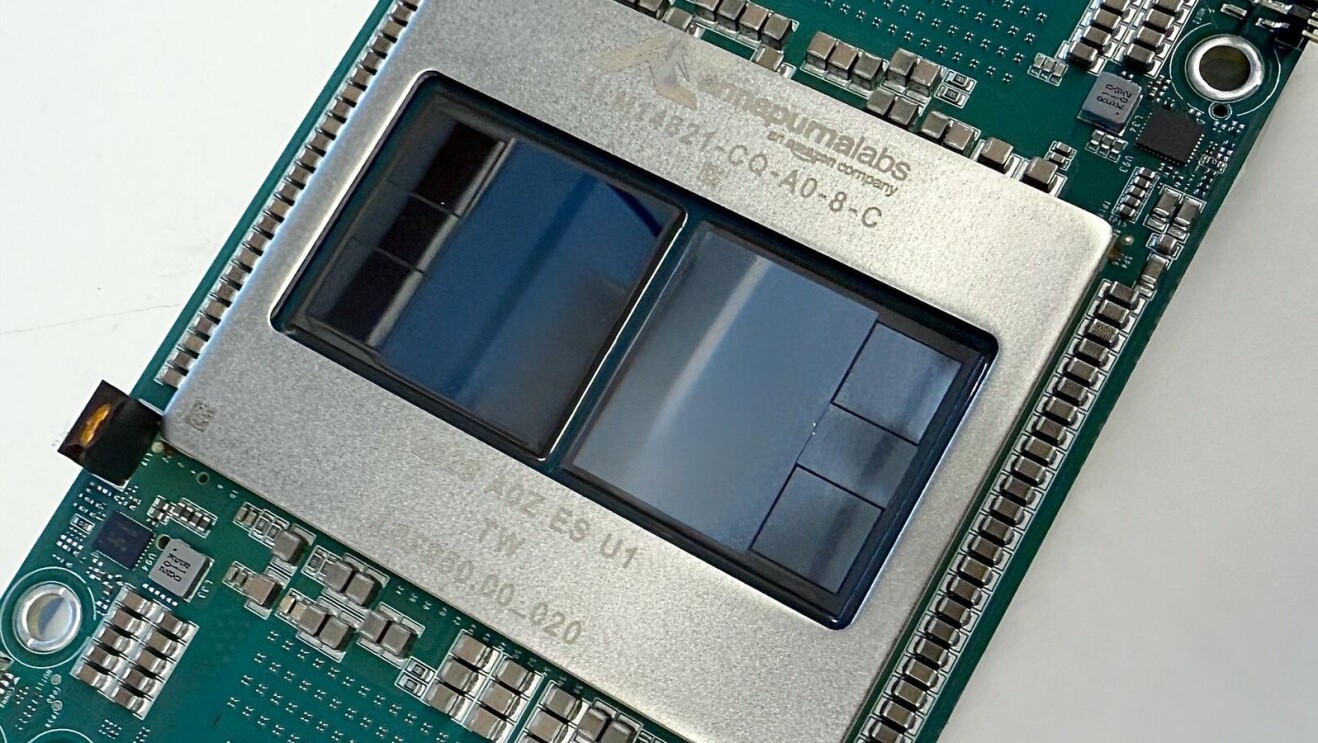

- AWS AI Factories deliver dedicated infrastructure combining the latest NVIDIA accelerated computing platform, Trainium chips, AWS AI services, and AWS high-speed, low-latency networking.

- Customers can leverage their existing data center space, network connectivity, and power while AWS handles the complexity of deployment and management of the integrated infrastructure.

- AWS AI Factories help enterprises and public sector organizations meet their data sovereignty and regulatory requirements, with accelerated deployment timelines.

As governments and large organizations seek to scale AI projects, some are turning to the concept of an “AI factory” to address their unique sovereignty and compliance needs. But building a high-performance AI factory requires a comprehensive set of management, database, storage, and security services—complexity that few customers want to take on themselves. To address this need, today we announced AWS AI Factories, a new offering that provides enterprises and governments with dedicated AWS AI infrastructure deployed in their own data centers.

AWS AI Factories combine the latest AI accelerators, including cutting-edge NVIDIA AI computing and Trainium chips, AWS high-speed, low-latency networking, high-performance storage and databases, security, and energy-efficient infrastructure, together with comprehensive AI services like Amazon Bedrock and SageMaker AI so customers can rapidly develop and deploy AI applications at scale.

Organizations in regulated industries and the public sector face a critical AI infrastructure challenge in getting their large-scale AI projects deployed. Building their own AI capabilities requires massive capital investments in GPUs, data centers, and power, plus navigating complex procurement cycles, selecting the right AI model for their use case, and licensing models from different AI providers. This creates multi-year timelines and operational complexity that divert focus from their core business goals.

AWS AI Factories address this challenge by deploying dedicated AWS AI infrastructure in customers’ own data centers, operated exclusively for them. AWS AI Factories operate like a private AWS Region that gives secure, low-latency access to compute, storage, database, and AI services. This approach lets you leverage existing data center space and power capacity you’ve already acquired and gives access to AWS AI infrastructure and services—from the latest AI chips for training and inference to tools for building, training, and deploying AI models. It also provides managed services that offer access to leading foundation models without having to negotiate separate contracts with model providers—all while helping you meet security, data sovereignty, and regulatory requirements for where data is processed and stored.

Drawing on nearly two decades of cloud leadership and unmatched experience in architecting large-scale AI systems, we can deploy secure, reliable AI infrastructure faster than most organizations can on their own, saving them years of buildout effort and taking on the operational complexity for them.

AWS and NVIDIA expand collaboration to accelerate customer AI infrastructure deployments

The relationship between AWS and NVIDIA goes back 15 years, to when we launched the world’s first GPU cloud instance, and today we offer the widest range of GPU solutions for customers. Building on our longstanding collaboration to deliver advanced AI infrastructure, AWS and NVIDIA make it possible for customers to build and run large language models faster, at scale, and more securely than anywhere else, now in your own data centers. With the NVIDIA-AWS AI Factories integration, AWS customers have seamless access to the NVIDIA accelerated computing platform, full-stack NVIDIA AI software, and thousands of GPU-accelerated applications to deliver high performance, efficiency, and scalability for building next-generation AI solutions.

We continue to bring the best of our technologies together. The AWS Nitro System, Elastic Fabric Adapter (EFA) petabit-scale networking, and Amazon EC2 UltraClusters support the latest NVIDIA Grace Blackwell and the next-generation NVIDIA Vera Rubin platforms. In the future, AWS will support NVIDIA NVLink Fusion high-speed chip interconnect technology in next-generation Trainium4 and Graviton chips, and in the Nitro System. This integration makes it possible for customers to accelerate time to market and achieve better performance.

“Large-scale AI requires a full-stack approach—from advanced GPUs and networking to software and services that optimize every layer of the data center. Together with AWS, we’re delivering all of this directly into customers’ environments,” said Ian Buck, vice president and general manager of Hyperscale and HPC at NVIDIA. “By combining NVIDIA’s latest Grace Blackwell and Vera Rubin architectures with AWS’s secure, high-performance infrastructure and AI software stack, AWS AI Factories allow organizations to stand up powerful AI capabilities in a fraction of the time and focus entirely on innovation instead of integration.”

Helping the public sector accelerate AI adoption

AWS AI Factories are built to meet AWS’s rigorous security standards, providing governments with the confidence to run their most sensitive workloads across all classification levels: Unclassified, Sensitive, Secret, and Top Secret. AWS AI Factories will also provide governments around the world with the availability, reliability, security, and control they need to help their own economies advance and take advantage of the benefits of AI technologies.

AWS and NVIDIA are collaborating on a strategic partnership with HUMAIN, the global company based in Saudi Arabia building full-stack AI capabilities. AWS is building a first-of-its-kind “AI Zone” in Saudi Arabia featuring up to 150,000 AI chips, including GB300 GPUs, dedicated AWS AI infrastructure, and AWS AI services, all within a HUMAIN purpose-built data center.

“The AI factory AWS is building in our new AI Zone represents the beginning of a multi-gigawatt journey for HUMAIN and AWS. From inception, this infrastructure has been engineered to serve both the accelerating local and global demand for AI compute,” said Tareq Amin, CEO of HUMAIN. “What truly sets this partnership apart is the scale of our ambition and the innovation in how we work together. We chose AWS because of their experience building infrastructure at scale, enterprise-grade reliability, breadth of AI capabilities, and depth of commitment to the region. Through a shared commitment to global market expansion, we are creating an ecosystem that will shape the future of how AI ideas can be built, deployed, and scaled for the whole world.”
